In an increasingly digitized world, artificial intelligence is making remarkable strides in transforming human lives. One of its most interesting applications is AI chatbots for companionship. These digital entities aim to provide emotional support, imitate human conversation, and even foster a sense of connection when human interaction is absent.

Understanding AI Chatbots for Companionship

AI chatbots for companionship are virtual assistants designed to engage users in personalized, emotionally aware ways. Like general-purpose bots, they model conversations, but they also attempt to recognize the user's emotions and respond with empathy.

Examples include Replika, Woebot, and others that aim to help users cope with loneliness, support their mental health, or simply enjoy a fun conversation.

Features of AI Chatbots for Companionship

Natural Language Processing (NLP): AI chatbots use NLP models to interpret and generate human language. This allows fluid, context-aware conversations.

Emotion Recognition: Some chatbots use sentiment or emotion analysis to infer how the user feels and choose what to say accordingly. For example, they might respond with something comforting when a user expresses sadness.

Customization: These chatbots let people make the bot their own by tailoring its personality, tone, and even interests to match their preferences.

24/7 Availability: Unlike human companions, AI chatbots are available around the clock and can offer support at any time, from anywhere.
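To make the emotion-recognition and customization ideas more concrete, here is a minimal Python sketch. It assumes a toy keyword lexicon in place of a real emotion classifier and a hypothetical `Persona` with a user-chosen `tone`; the names `detect_emotion`, `reply`, `Persona`, and the word lists are illustrative only and do not describe how Replika, Woebot, or any other product actually works.

```python
from dataclasses import dataclass

# Hypothetical toy lexicons; a real chatbot would use a trained emotion model.
NEGATIVE_WORDS = {"sad", "lonely", "tired", "anxious", "upset", "miss"}
POSITIVE_WORDS = {"happy", "great", "excited", "glad", "proud"}


@dataclass
class Persona:
    """User-customizable personality settings (hypothetical fields)."""
    name: str = "Sam"
    tone: str = "warm"  # assumed options: "warm" or "playful"


def detect_emotion(message: str) -> str:
    """Label a message 'negative', 'positive', or 'neutral' by counting lexicon hits."""
    words = [w.strip(".,!?").lower() for w in message.split()]
    neg = sum(w in NEGATIVE_WORDS for w in words)
    pos = sum(w in POSITIVE_WORDS for w in words)
    if neg > pos:
        return "negative"
    if pos > neg:
        return "positive"
    return "neutral"


# Canned replies keyed by (persona tone, detected emotion).
RESPONSES = {
    ("warm", "negative"): "I'm sorry you're feeling this way. Do you want to talk about it?",
    ("warm", "positive"): "That's wonderful to hear! What made today so good?",
    ("warm", "neutral"): "I'm here. How has your day been?",
    ("playful", "negative"): "Oh no, that sounds rough. Want to vent, or should I cheer you up?",
    ("playful", "positive"): "Yes! Tell me everything!",
    ("playful", "neutral"): "So, what's on your mind?",
}


def reply(message: str, persona: Persona) -> str:
    """Pick a reply that matches both the user's emotion and the chosen persona tone."""
    emotion = detect_emotion(message)
    return f"{persona.name}: {RESPONSES[(persona.tone, emotion)]}"


if __name__ == "__main__":
    # prints: Sam: I'm sorry you're feeling this way. Do you want to talk about it?
    print(reply("I feel so lonely tonight.", Persona()))
```

Counting keywords keeps the sketch short, but it misses negation ("not happy" is scored as positive) and context, which is one reason practical systems rely on trained sentiment or emotion classifiers instead.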

Ethical Considerations and Challenges in AI-Based Companionship

Transparency: Developers must make users aware that they are talking to an AI chatbot and not a human. Misleading users can damage trust and is unethical.

Limited Empathy: Despite recent advances, AI chatbots lack genuine emotional understanding. Their empathetic responses are programmed or learned from data and may feel artificial in some situations.

Dependency Risks: Prolonged reliance on AI companionship might reduce human interaction and increase social isolation rather than relieve it.

Privacy Concerns: AI chatbots need user data for personalization, raising concerns about data security and potential misuse.

Cultural Sensitivity and Inclusiveness: AI chatbots for companionship should account for users' diverse cultural, linguistic, and emotional needs and should avoid bias in their responses. Developers are responsible for making these chatbots culturally sensitive and inclusive.

Ethical Dilemmas: As users develop unrealistic attachments to AI chatbots, ethical questions arise about emotional exploitation and the responsibilities of developers.

Regulation and Accountability: As AI chatbots become more sophisticated, clear regulations will be needed to hold developers accountable for misuse of, or harm caused by, their creations.

Applications of AI Chatbots for Companionship

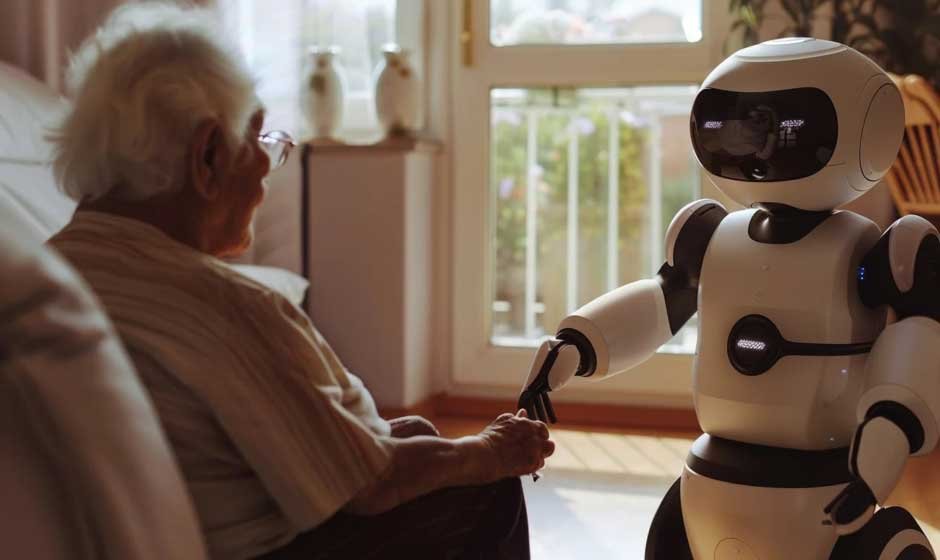

Elderly Care: AI chatbots may help elderly people live more independently, for example by providing reminders for medications and appointments.

Mental Health Assistance: Some chatbots go beyond companionship to support mental health by holding therapeutic conversations with users and tracking their moods.

Education and Coaching: Chatbots could serve as study buddies that provide personalized learning support, or as life coaches that motivate users to reach their goals.

Conclusion

AI chatbots for companionship bring technology and people together. By leveraging professional AI chatbot development services, businesses can create intelligent, responsive, and user-friendly chatbots that enhance digital interactions and provide valuable companionship. These chatbots cannot replace human relationships, but they are a useful supplement, offering support, entertainment, and conversation in an increasingly isolated world. By addressing the challenges and ethical concerns they raise, developers can refine these tools to enrich lives and foster real connections in the digital age.